@ShahidNShah

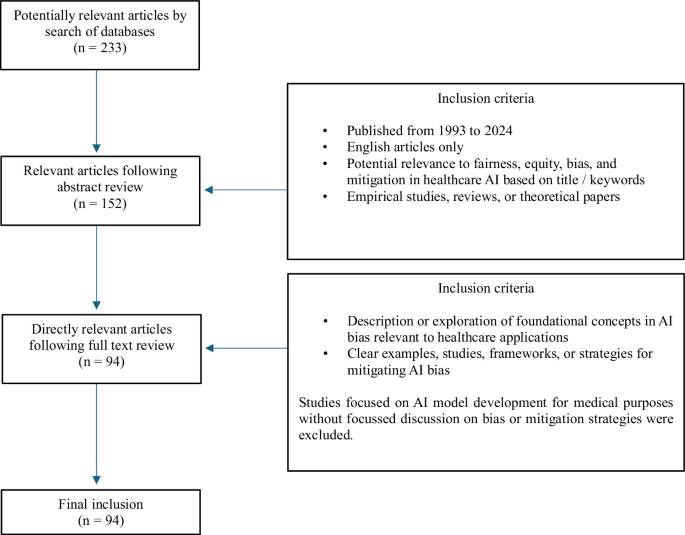

Artificial intelligence (AI) is delivering value across all aspects of clinical practice. However, bias in AI models may exacerbate healthcare disparities. This review examines the origins of bias in healthcare AI, strategies for mitigating it, and the responsibilities of relevant stakeholders in achieving fair and equitable use. The authors highlight the importance of systematically identifying bias and undertaking mitigation activities throughout the AI model lifecycle, from model conception through to deployment and longitudinal surveillance.

As of May 13, 2024, the Food and Drug Administration (FDA) update indicated an unprecedented surge in the approval of AI-enabled medical devices, listing 191 new entries for a total of 882. Most of these devices are in radiology (76%), followed by cardiology (10%) and neurology (4%).

Biases observed in healthcare AI are predominantly human in origin. While rarely introduced deliberately, they reflect historic or prevalent human perceptions, assumptions, or preferences that can manifest at later stages of AI model development, potentially with profound impact. For example, data collection influenced by human bias can yield training data from which algorithms learn to replicate historical healthcare inequalities, creating a cycle of reinforcement in which past injustices are perpetuated into future practice.
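The review stresses systematically identifying bias throughout the model lifecycle. A common starting point (not necessarily the review's own method) is a subgroup performance audit: compute a metric such as sensitivity separately for each patient group and flag groups that trail the best-performing one. The Python sketch below assumes binary labels and predictions plus one group label per case. The function names `subgroup_sensitivity` and `flag_disparities`, the 0.05 gap threshold, and the toy data are hypothetical choices for illustration only.

```python
from collections import defaultdict


def subgroup_sensitivity(y_true, y_pred, groups):
    """Return sensitivity (true positive rate) for each subgroup.

    Only cases with y_true == 1 contribute. Groups with no positive
    cases are omitted because sensitivity is undefined for them.
    """
    positives = defaultdict(int)
    true_positives = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            if pred == 1:
                true_positives[group] += 1
    return {g: true_positives[g] / positives[g] for g in positives}


def flag_disparities(rates, max_gap=0.05):
    """Return subgroups whose rate trails the best subgroup by more than max_gap.

    The default max_gap of 0.05 is an illustrative assumption,
    not a clinical or regulatory standard.
    """
    best = max(rates.values())
    return sorted(g for g, r in rates.items() if best - r > max_gap)


if __name__ == "__main__":
    # Toy values for illustration only; not real patient data.
    y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
    y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 1, 1]
    groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]
    rates = subgroup_sensitivity(y_true, y_pred, groups)
    print(rates)                    # {'A': 0.75, 'B': 0.5}
    print(flag_disparities(rates))  # ['B']
```

Sensitivity is used here because missed diagnoses concentrated in one group are a typical way historical inequities resurface; in practice an audit would track several metrics and repeat the check during post-deployment surveillance.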

Continue reading at nature.com



© 2025 Netspective Foundation, Inc. All Rights Reserved.
